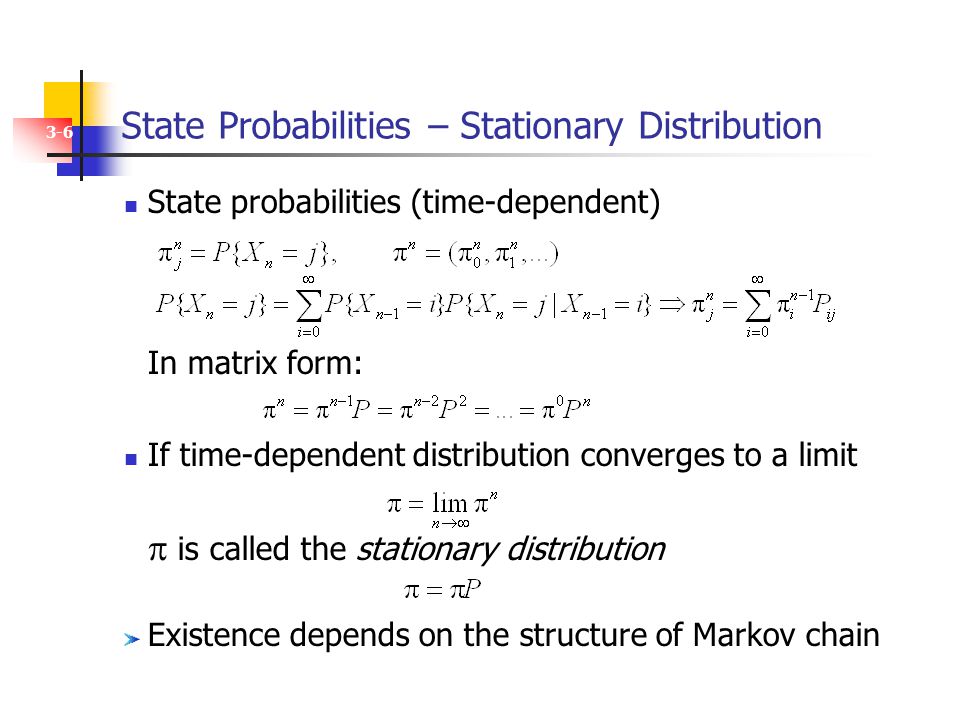

eigenvalue - Obtaining the stationary distribution for a Markov Chain using eigenvectors from large matrix in MATLAB - Stack Overflow

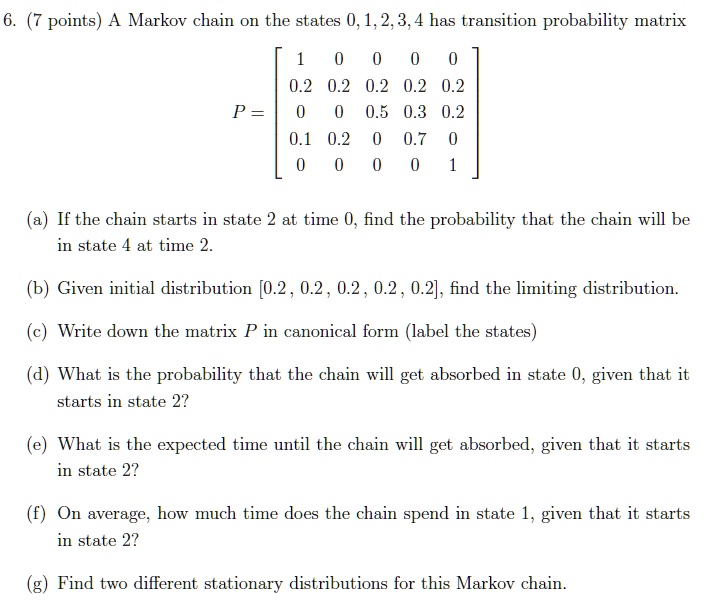

SOLVED: points) A Markov chain on the states 0, 1, 2, 3, 4 has transition probability matrix P = 0.2 0.2 0.2 0.2 0.2 0.5 0.3 0.2 0.1 0.2 0.7 If the chain starts in state

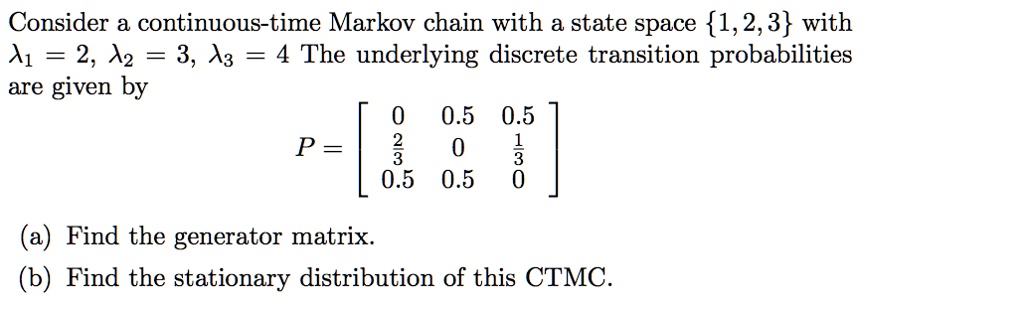

SOLVED: Consider a continuous-time Markov chain with state space {1, 2, 3} with λ1 = 2, λ2 = 3, λ3 = 4. The underlying discrete transition probabilities are given by P = 0 0.5 0.5

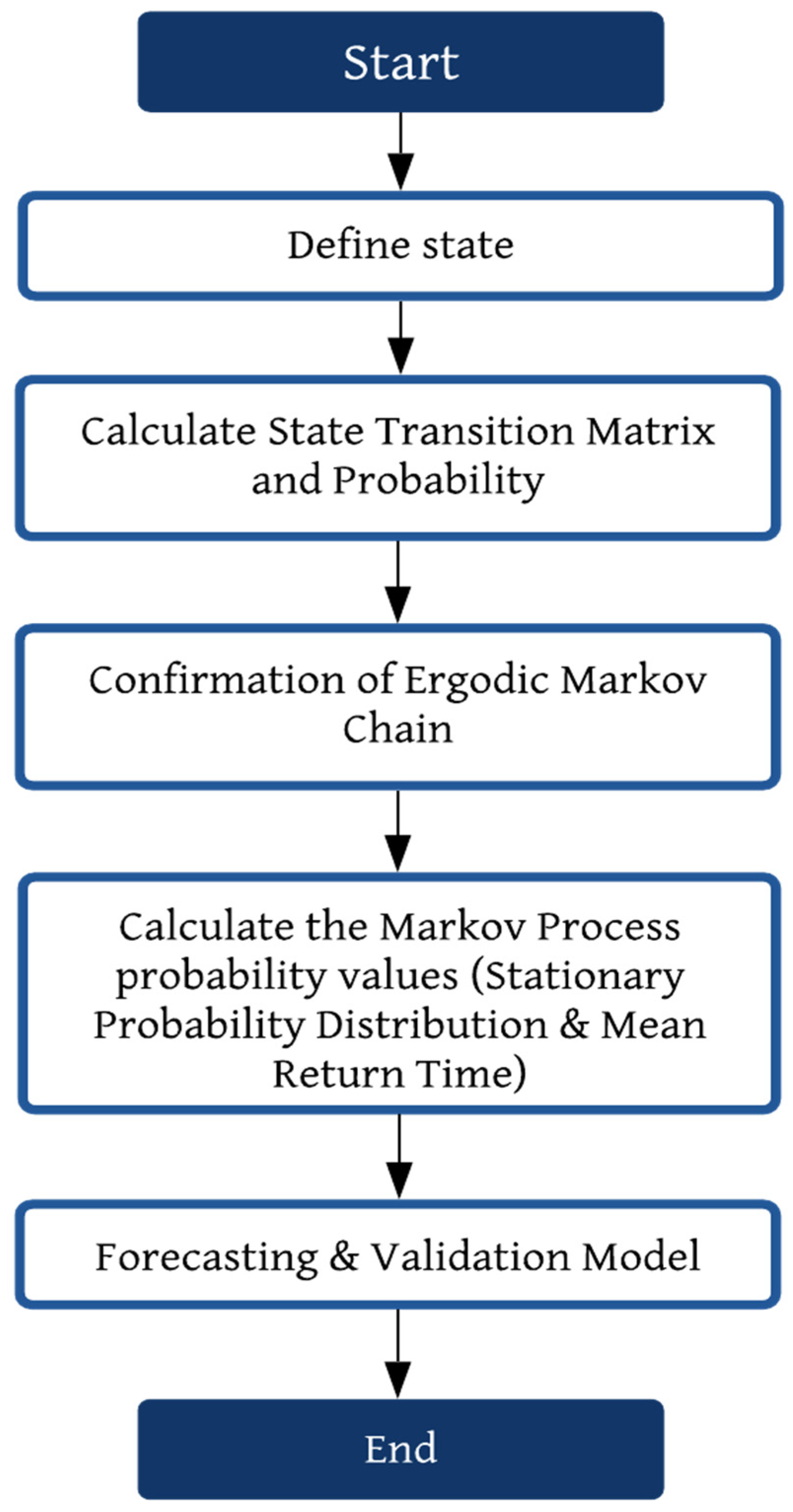

Sustainability | Free Full-Text | Markov Chain Model Development for Forecasting Air Pollution Index of Miri, Sarawak

SOLVED: (10 points) (Without Python) Let (X_m), m ≥ 0, be a stationary discrete-time Markov chain with state space S = {1, 2, 3, 4} and transition matrix 1/3 1/2 1/6 1/2 1/8 1/4 1/8 1/4